
Introduction

This session introduces Python classes and shows how to create your own Artificial Neural Network (ANN) class. The class implements the `__init__`, `feedforward`, and `backpropagation` methods.

Each method is described in detail so that the ANN's inner workings are easy to follow. Then the MNIST dataset of handwritten digits is used to train and test our ANN.

Objectives

- Learn the basics of using Python Classes

- Create methods for initialization, feedforward, and backpropagation

- Train the ANN

- Test the ANN

Classes and methods

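A class defines the structure, data, and methods that an object will have. In Python every attribute is technically public; a leading underscore (for example `self._weights`) only marks a variable as private by convention. The main elements of a class are: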

- Arguments

- Initialization

- Methods

- Destruction
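A minimal sketch of those four elements, using a hypothetical `Counter` class that is not part of the original notebook. `__init__` receives the arguments and initializes the object, `increment` is an ordinary method, and `__del__` is Python's destruction hook, which CPython usually calls as soon as the last reference to the object disappears (other interpreters may call it later).

```python
class Counter:
    """Hypothetical example class: arguments, initialization, methods, destruction."""

    def __init__(self, start, step=1):
        # arguments (start, step) are stored during initialization
        self.value = start
        self._step = step  # leading underscore: "private" by convention only

    def increment(self):
        # a method that reads and updates the object's own data
        self.value += self._step
        return self.value

    def __del__(self):
        # destruction hook, called when the object is about to be destroyed
        print("Counter destroyed")


c = Counter(5)
print(c.increment())  # prints 6
del c                 # in CPython this usually prints "Counter destroyed" right away
```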

```python
class Dog:
    # init method: runs when a new Dog object is created
    def __init__(self, dogName, dogAge):
        self.name = dogName
        self.age = dogAge

    # a method that uses the object's own data
    def status(self):
        print("The dog's name is:", self.name)
        print("The dog's age is:", self.age)


# three independent Dog objects, each with its own name and age
dog1 = Dog("firulais", 8)
dog2 = Dog("Perro", 2)
dog3 = Dog("cuadrado", 5)

dog3.status()
```

```
The dog's name is: cuadrado
The dog's age is: 5
```

ANN class implementation

The next chunk of code defines the neural network's basic structure. We will implement the methods one at a time so that each of them is easier to understand.

```python
class Neurona:
    # init
    def __init__(self):
        pass

    # feedforward (computation)
    def feedforward(self):
        pass

    # backpropagation (training)
    def backpropagation(self):
        pass
```

Initialization (creation) method

Let's begin with the initialization. We need to set the number of input, hidden, and output layer nodes, which defines the shape and size of the neural network. Rather than fixing these numbers in the code, we pass them as parameters when a new neural network object is created. That way we can easily create neural networks of different sizes with the same class.

Good programmers, computer scientists, and mathematicians try to write general code rather than specific code whenever they can. It is a good habit because it forces us to think about problems more deeply and more generally, and it means our code can be reused in more scenarios.

Let us see what our code should look like:

```python
import numpy as np

class Neurona:
    # init: store the layer sizes and create random weight matrices
    def __init__(self, inputNodes, hiddenNodes, outputNodes):
        self.inN = inputNodes
        self.hN = hiddenNodes
        self.outN = outputNodes

        # wih links input -> hidden, shape (hN, inN): row j holds the weights into hidden node j
        # who links hidden -> output, shape (outN, hN)
        self.wih = np.random.rand(self.hN, self.inN)
        self.who = np.random.rand(self.outN, self.hN)

    # feedforward (computation)
    def feedforward(self):
        pass

    # backpropagation (training)
    def backpropagation(self):
        pass
```

At this point we are only creating an object; the class can't do anything useful yet. This is also a good way to start coding something: keep it small at the beginning, make commits, and then grow the methods.

Next, we add more code so that our ANN class can finish its initialization by creating the weight matrices.

Feedforward method

So the next step is to create the network of nodes and links. The most important part of the network is the link weights. They are used to calculate the signal being fed forward and the error as it is propagated backwards, and it is the link weights themselves that are refined in an attempt to improve the network.

For the basic NN, the weights are stored in two matrices:

- $W_{ih}$, which links the input and hidden layers and has size hidden nodes by input nodes ($h_n \times i_n$), and

- $W_{ho}$, which links the hidden and output layers and has size output nodes by hidden nodes ($o_n \times h_n$).

The signals are fed forward through the hidden layer as

$$X_h = W_{ih}\, I, \qquad O_h = \sigma(X_h)$$

where $I$ is the column vector of inputs and $\sigma$ is the sigmoid activation function $\sigma(x) = 1/(1+e^{-x})$. The output layer applies the same two steps: $X_o = W_{ho}\, O_h$ and $O_o = \sigma(X_o)$.
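A quick NumPy sketch of these equations, mainly to show how the matrix shapes line up. The layer sizes (3 inputs, 5 hidden nodes, 3 outputs), the seeded random generator, and the input values are illustrative assumptions; the sigmoid is the activation used by this document's `Neurona` class.

```python
import numpy as np

def sigmoid(x):
    # logistic activation: maps any real number into (0, 1)
    return 1 / (1 + np.exp(-x))

rng = np.random.default_rng(0)
n_in, n_hidden, n_out = 3, 5, 3                 # hypothetical layer sizes

W_ih = rng.random((n_hidden, n_in)) - 0.5       # hidden x input
W_ho = rng.random((n_out, n_hidden)) - 0.5      # output x hidden

I = np.array([[0.21], [0.39], [0.87]])          # input column vector, shape (3, 1)

X_h = W_ih @ I       # (5, 3) @ (3, 1) -> (5, 1)
O_h = sigmoid(X_h)   # hidden outputs, shape (5, 1)
X_o = W_ho @ O_h     # (3, 5) @ (5, 1) -> (3, 1)
O_o = sigmoid(X_o)   # network outputs, shape (3, 1)

print(X_h.shape, O_h.shape, X_o.shape, O_o.shape)  # (5, 1) (5, 1) (3, 1) (3, 1)
```

Because each weight matrix is stored as "destination nodes by source nodes", every layer is a single matrix-vector product followed by the element-wise activation.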


Backpropagation

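Training adjusts each weight in the direction that reduces the error $E$. For a weight $w_{ij}$ linking node $i$ of one layer to node $j$ of the next, the gradient is

$$\frac{\partial E}{\partial w_{ij}} = -e_j \cdot \sigma\left(\sum_m w_{mj}\, o_m\right)\left(1-\sigma\left(\sum_m w_{mj}\, o_m\right)\right) o_i$$

where $o_i$ is the output of node $i$ and $e_j$ is the error at node $j$ (for the output layer, $e_j = t_j - o_j$). Since $\sigma\left(\sum_m w_{mj}\, o_m\right)$ is just $o_j$, the code multiplies by `self.Oo*(1-self.Oo)` and `self.Oh*(1-self.Oh)`.

```python
import numpy as np


class Neurona:
    # init: store the layer sizes, create the weight matrices and the activation function
    def __init__(self, inputNodes, hiddenNodes, outputNodes):
        self.inN = inputNodes
        self.hN = hiddenNodes
        self.outN = outputNodes

        # wih: input -> hidden, shape (hN, inN); who: hidden -> output, shape (outN, hN)
        # subtracting 0.5 centres the random weights in [-0.5, 0.5)
        self.wih = np.random.rand(self.hN, self.inN) - 0.5
        self.who = np.random.rand(self.outN, self.hN) - 0.5

        # sigmoid activation function
        self.af = lambda x: 1 / (1 + np.exp(-x))

    # feedforward (computation)
    def feedforward(self, Inputs):
        # convert the input list into a column vector, shape (inN, 1)
        self.inputs = np.array(Inputs, ndmin=2).T
        # Xh = Wih . I   and   Oh = sigmoid(Xh)
        self.Xh = np.dot(self.wih, self.inputs)
        self.Oh = self.af(self.Xh)
        # Xo = Who . Oh   and   Oo = sigmoid(Xo)
        self.Xo = np.dot(self.who, self.Oh)
        self.Oo = self.af(self.Xo)
        return self.Oo

    # backpropagation (training)
    def backpropagation(self, Inputs, Targets, Learning):
        lr = Learning
        self.targets = np.array(Targets, ndmin=2).T

        # forward pass: sets self.inputs, self.Oh and self.Oo
        self.feedforward(Inputs)

        # output error, and hidden error obtained by splitting it back through who
        oe = self.targets - self.Oo
        he = np.dot(self.who.T, oe)

        # gradient-descent updates (he is computed with the old who, before it changes)
        self.who = self.who + lr * np.dot(oe * self.Oo * (1 - self.Oo), self.Oh.T)
        self.wih = self.wih + lr * np.dot(he * self.Oh * (1 - self.Oh), self.inputs.T)
```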
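Written for whole layers, the two update lines at the end of `backpropagation` correspond to the following matrix form, where $\alpha$ is the learning rate `lr`, $T$ is the target vector, $E_o$ is `oe`, $E_h$ is `he`, and $\circ$ denotes element-wise multiplication. This restates the code above in matrix notation; it is not an additional step.

$$E_o = T - O_o, \qquad E_h = W_{ho}^{T} E_o$$

$$W_{ho} \leftarrow W_{ho} + \alpha \left(E_o \circ O_o \circ (1 - O_o)\right) O_h^{T}$$

$$W_{ih} \leftarrow W_{ih} + \alpha \left(E_h \circ O_h \circ (1 - O_h)\right) I^{T}$$

The plus sign appears because each update subtracts $\alpha\,\partial E/\partial w_{ij}$, and the gradient already carries a minus sign.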

Because the weights start out random, your numbers will differ; comparing the two outputs shows that each call nudges `who` slightly.

```python
mynn = Neurona(3, 5, 3)

mynn.backpropagation([0.21, 0.39, 0.87], [0.12, 0.10, 0.99], 0.3)
mynn.who
```

```
array([[0.89962766, 0.94344371, 0.25520613, 0.50403018, 0.79841922],
       [0.46742312, 0.8497042 , 0.72150451, 0.4832481 , 0.1614701 ],
       [0.07348318, 0.74125584, 0.96294501, 0.6829789 , 0.99122929]])
```

```python
mynn.backpropagation([0.21, 0.39, 0.87], [0.12, 0.10, 0.99], 0.3)
mynn.who
```

```
array([[0.88622924, 0.92904876, 0.24001207, 0.48936863, 0.78475758],
       [0.45035738, 0.83136916, 0.70215163, 0.46457349, 0.14406909],
       [0.07510425, 0.74299748, 0.96478333, 0.68475279, 0.9928822 ]])
```
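One way to check that the gradient formula in the Backpropagation section is right is a finite-difference test. The sketch below assumes the error is $E = \frac{1}{2}\sum_j e_j^2$ (the factor ½ makes the derivative match the formula exactly), uses hypothetical random values for `who` and the hidden outputs, and compares the analytic gradient with a numerical one for every weight in `who`.

```python
import numpy as np

def sigmoid(x):
    return 1 / (1 + np.exp(-x))

def error(who, o_h, targets):
    # assumed error function: E = 1/2 * sum_j (t_j - o_j)^2
    o_o = sigmoid(who @ o_h)
    return 0.5 * np.sum((targets - o_o) ** 2)

rng = np.random.default_rng(1)
who = rng.random((3, 5)) - 0.5                # hidden -> output weights (hypothetical)
o_h = rng.random((5, 1))                      # hidden-layer outputs o_i (hypothetical)
targets = np.array([[0.12], [0.10], [0.99]])

# analytic gradient: dE/dw_ij = -e_j * o_j * (1 - o_j) * o_i, arranged like who
o_o = sigmoid(who @ o_h)
e = targets - o_o
analytic = -(e * o_o * (1 - o_o)) @ o_h.T

# numerical gradient by central differences, one weight at a time
eps = 1e-6
numeric = np.zeros_like(who)
for r in range(who.shape[0]):
    for c in range(who.shape[1]):
        w_plus, w_minus = who.copy(), who.copy()
        w_plus[r, c] += eps
        w_minus[r, c] -= eps
        numeric[r, c] = (error(w_plus, o_h, targets) - error(w_minus, o_h, targets)) / (2 * eps)

# expected to be very small (well below 1e-6) if the formula is correct
print(np.max(np.abs(analytic - numeric)))
```

Since `backpropagation` adds `lr` times the negative of this gradient to `who`, a passing check also confirms the sign of the update.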

MNIST database

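```python
# read the training set: one image per line, the label followed by 784 pixel values
# (the original called data_file.close without parentheses, so the file was never closed;
#  the with-statement closes it automatically)
with open("mnist_train.csv", "r") as data_file:
    data_list = data_file.readlines()

data_list
```

Output omitted due to its length.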

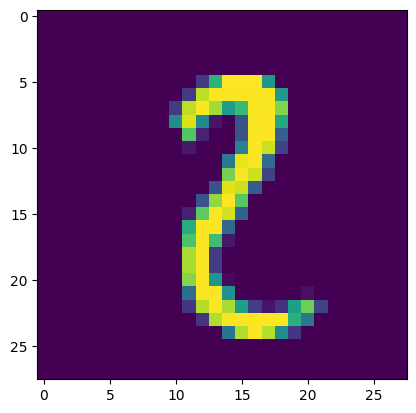

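```python
import numpy as np
import matplotlib.pyplot as plt

# pick one record: the first value is the label, the remaining 784 are pixel intensities
values = data_list[30600].split(',')
# np.asfarray was removed in NumPy 2.0, so convert with np.asarray(..., dtype=float)
image = np.asarray(values[1:], dtype=float).reshape((28, 28))

plt.imshow(image)
plt.show()

values[0]
```

```
'1'
```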

Training

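```python
# hyper-parameters:
inputNodes = 784    # one input per pixel (28 x 28)
hiddenNodes = 100
outputNodes = 10    # one output per digit, 0-9
learningRate = 0.1

myANN = Neurona(inputNodes, hiddenNodes, outputNodes)

# weights from the first input node to each of the 100 hidden nodes
myANN.wih[:, 0]
```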

```
array([ 3.80810137e-01, -2.76021452e-01,  1.89578510e-01, -4.40622523e-01,
        4.49345620e-04, -2.99576867e-02,  2.88898176e-01,  1.00257001e-01,
       -1.25427321e-01, -4.10841382e-01,  1.01058830e-01, -4.19682107e-01,
       -2.61884751e-01, -4.86639132e-01, -4.10475994e-01,  4.72554845e-01,
       -2.58545906e-01,  2.12843730e-01,  4.77632343e-01,  4.85691685e-01,
       -2.21585439e-01,  1.43760970e-01, -2.23361202e-01, -3.69871226e-01,
       -1.21973032e-01, -4.29052035e-01, -3.97413451e-01,  4.65864914e-01,
       -1.26186271e-01,  2.07401026e-01,  1.05937271e-01,  1.46875776e-01,
        2.95015245e-01,  3.43457017e-02, -3.29510246e-01, -2.48072947e-01,
       -3.64935302e-01,  3.09460892e-01, -5.01871329e-02,  2.98023264e-01,
       -3.19341252e-01, -3.90225500e-02,  3.10060197e-01, -3.13901381e-01,
        3.69558936e-01, -1.38918625e-01, -4.78037558e-01,  9.24705861e-02,
       -1.34122723e-01, -8.70299561e-02, -3.93637460e-02,  3.81876093e-01,
       -8.53474718e-02, -1.29582776e-01, -4.02245397e-01, -4.56054710e-01,
        2.64854223e-02, -1.53704117e-01,  1.90609293e-01,  3.62048289e-01,
       -6.25482544e-02,  3.82745274e-01,  1.43009716e-01,  1.75700493e-01,
        4.09349632e-01, -4.89451563e-01, -9.27621754e-02,  1.41559919e-01,
        1.34585537e-01, -2.86828229e-01,  3.44307471e-01, -4.98223666e-01,
       -4.05137857e-01,  1.81890913e-01, -4.57908080e-01,  4.87169160e-01,
       -2.11761055e-01,  3.18985378e-02, -3.00127711e-01,  4.80807855e-01,
       -2.70966977e-01,  2.36741392e-02, -3.03853361e-01, -4.29846112e-02,
        5.33828969e-02,  2.18366071e-01,  2.13736555e-01, -1.15094147e-01,
       -2.41327435e-02,  3.40917056e-01,  3.76097667e-02, -5.77225150e-03,
       -3.83882298e-01, -2.31975044e-01,  2.44874629e-01,  7.90940279e-02,
       -2.92400249e-01,  4.53702317e-01,  8.09984361e-02, -4.93878547e-02])
```

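```python
epoch = 1

for e in range(epoch):
    # go through every record in the training set
    for record in data_list:
        values = record.split(',')
        # scale pixel values from 0-255 into the range 0.01-1.00
        inputData = (np.asarray(values[1:], dtype=float) / 255 * 0.99) + 0.01
        # target: 0.01 for every output node except 0.99 for the correct label
        target = np.zeros(outputNodes) + 0.01
        target[int(values[0])] = 0.99
        myANN.backpropagation(inputData, target, learningRate)
```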
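The scaling in the loop keeps every value away from exactly 0 and 1. An input of 0 would zero out the updates of its weights (the `wih` update is multiplied by the inputs), and a sigmoid output can never reach exactly 0 or 1, so targets of 0.01 and 0.99 are attainable. A small sketch of the two steps as standalone functions; `scale_inputs` and `make_target` are hypothetical helper names, not part of the notebook, and the record is a shortened made-up example.

```python
import numpy as np

def scale_inputs(pixels):
    # map raw pixel values 0..255 (given as strings or numbers) into 0.01..1.00
    return np.asarray(pixels, dtype=float) / 255 * 0.99 + 0.01

def make_target(label, output_nodes=10):
    # 0.01 for every output node except 0.99 for the correct digit
    target = np.zeros(output_nodes) + 0.01
    target[int(label)] = 0.99
    return target

record = "7,0,128,255"            # hypothetical, shortened record: label then pixels
values = record.split(',')
print(scale_inputs(values[1:]))   # approximately [0.01, 0.507, 1.0]
print(make_target(values[0]))     # 0.99 at index 7, 0.01 elsewhere
```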

```python
len(data_list)
```

```
49999
```

```python
# feed one training record through the trained network
values = data_list[30600].split(',')
inputData = (np.asarray(values[1:], dtype=float) / 255 * 0.99) + 0.01
myANN.feedforward(inputData)
myANN.Oo
```

```
array([[2.42193187e-02],
       [1.11760730e-01],
       [5.09994337e-01],
       [4.44172963e-02],
       [3.37977872e-04],
       [3.97805262e-03],
       [1.06099919e-03],
       [3.15328122e-02],
       [1.33289159e-01],
       [2.44129745e-03]])
```
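The network's answer is the index of its largest output, so in this run it picks 2 (output 0.51) for a record labelled '1'. That record also comes from the training set; to measure accuracy on unseen data, every record in `mnist_test.csv` can be scored the same way. A minimal sketch, assuming the test file uses the same "label, 784 pixels" format as the training file; `score` is a hypothetical helper, and `net` is any object with this document's `feedforward` method and `Oo` attribute.

```python
import numpy as np

def score(net, records):
    """Fraction of CSV records whose label equals the index of the network's largest output."""
    correct = 0
    for record in records:
        values = record.strip().split(',')     # strip the trailing newline first
        label = int(values[0])
        inputs = np.asarray(values[1:], dtype=float) / 255 * 0.99 + 0.01
        net.feedforward(inputs)                # sets net.Oo, shape (outputNodes, 1)
        prediction = int(np.argmax(net.Oo))    # strongest output node = predicted digit
        if prediction == label:
            correct += 1
    return correct / len(records)

# usage, assuming myANN has been trained as above:
# with open("mnist_test.csv", "r") as test_file:
#     test_list = test_file.readlines()
# print(score(myANN, test_list))
```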